The Illustrated BERT, ELMo, and co. (How NLP Cracked Transfer Learning) – Jay Alammar – Visualizing machine learning one concept at a time.

![\[CW Paper-Club\] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding - YouTube](https://i.ytimg.com/vi/uyNArsMBW5Q/maxresdefault.jpg)

[CW Paper-Club] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding - YouTube

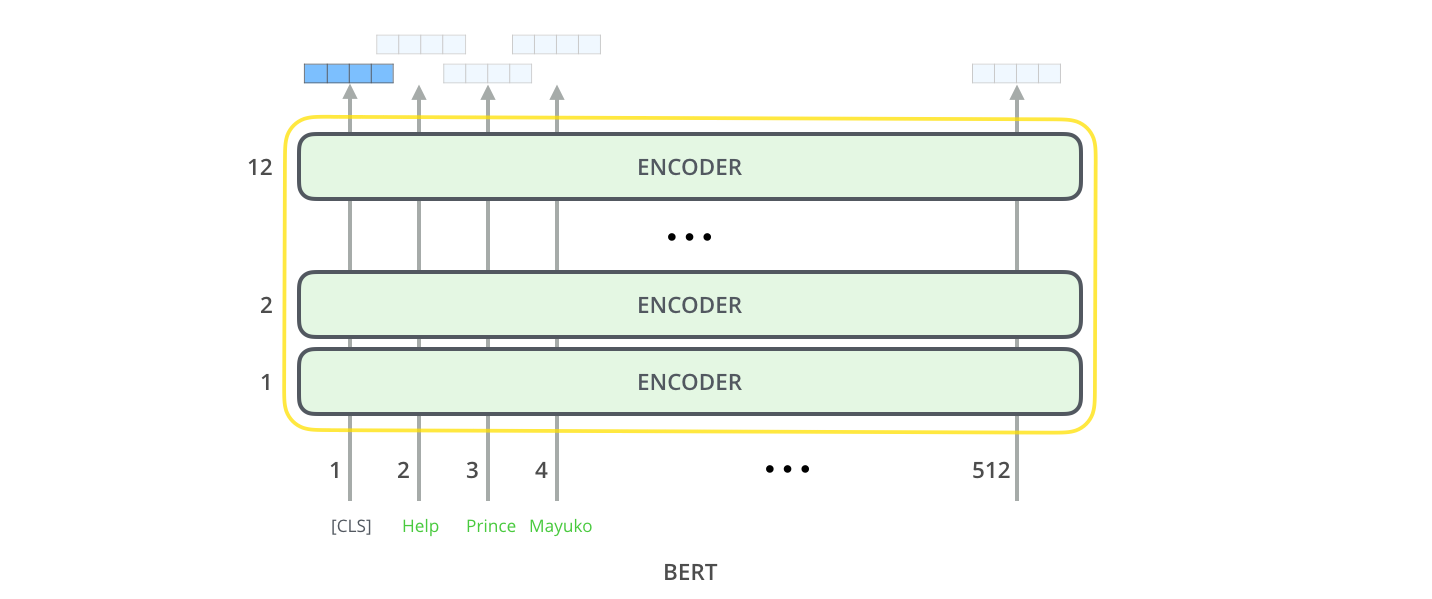

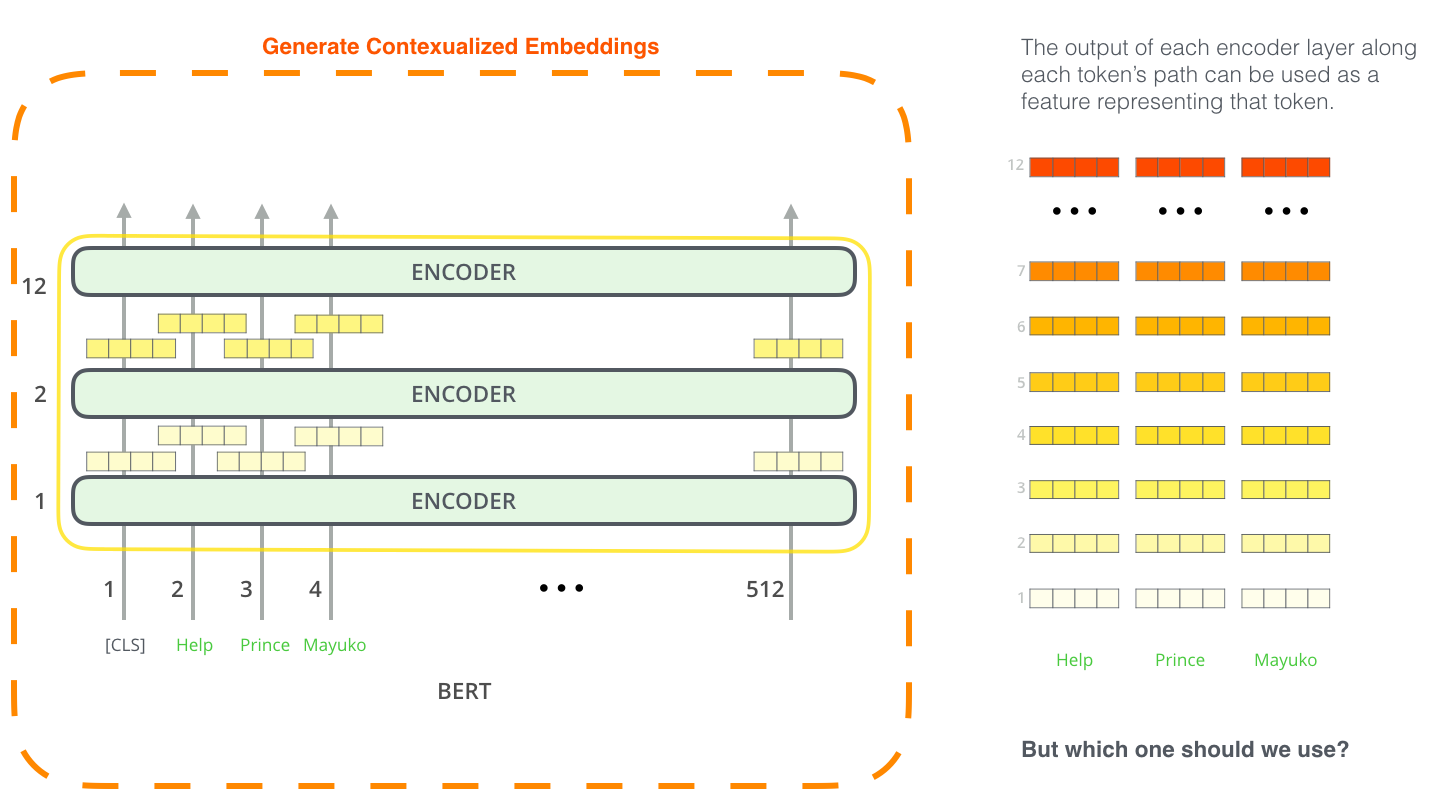

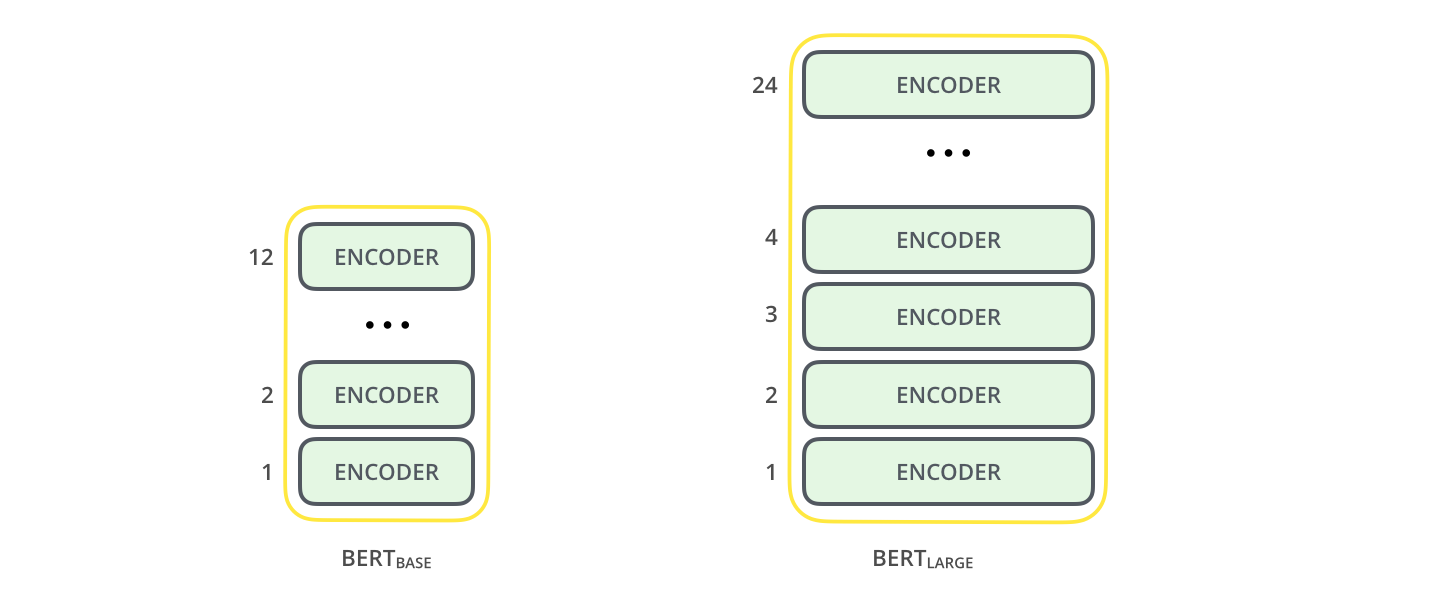

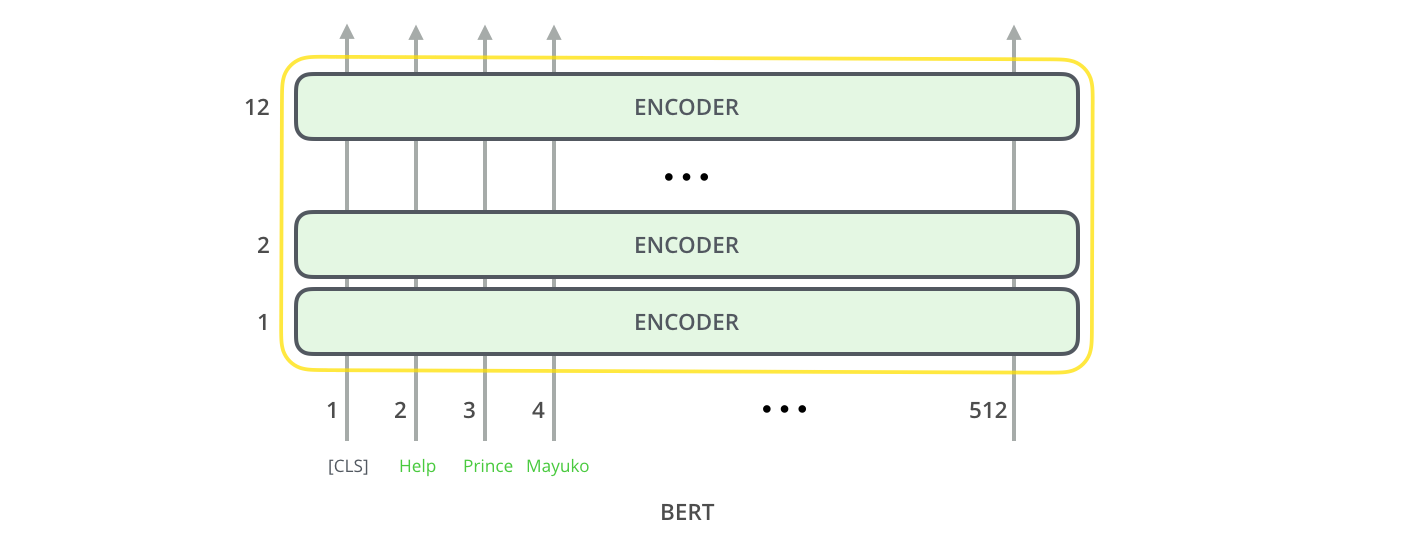

Different layers in Google BERT's architecture. (Reproduced from the... | Download Scientific Diagram

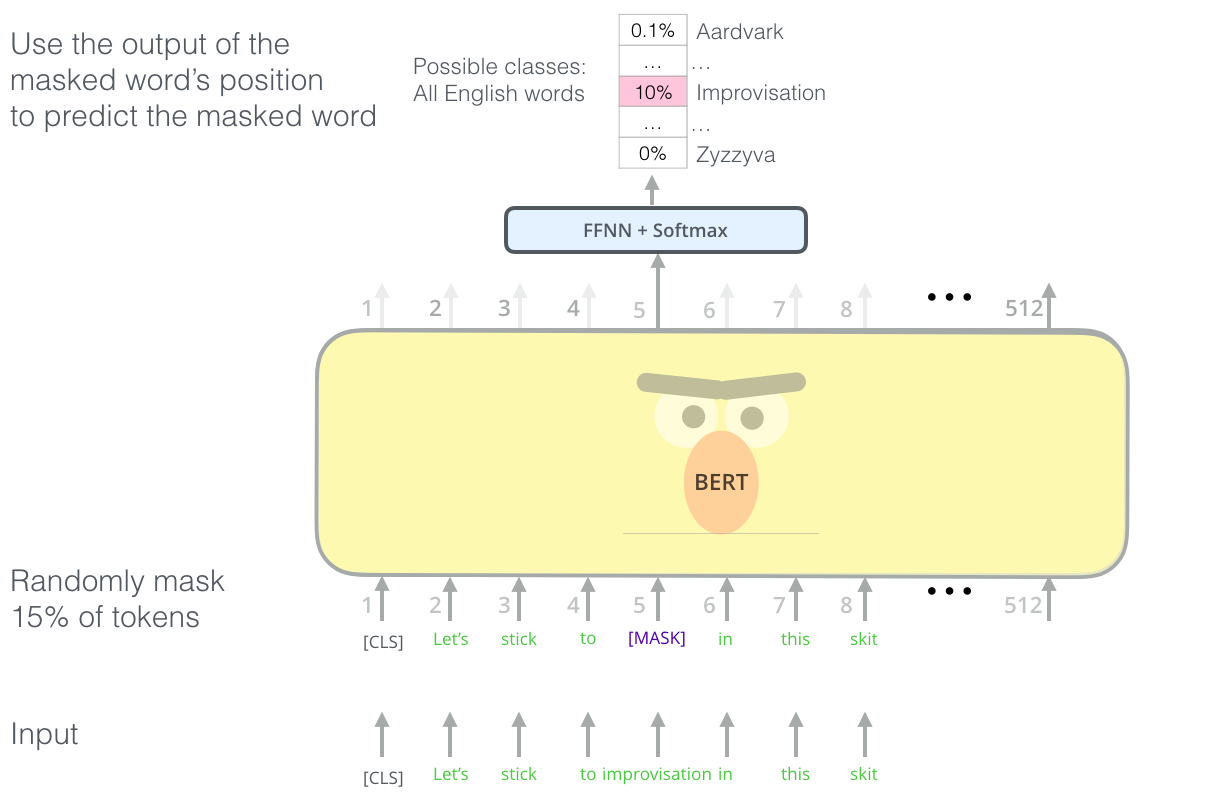

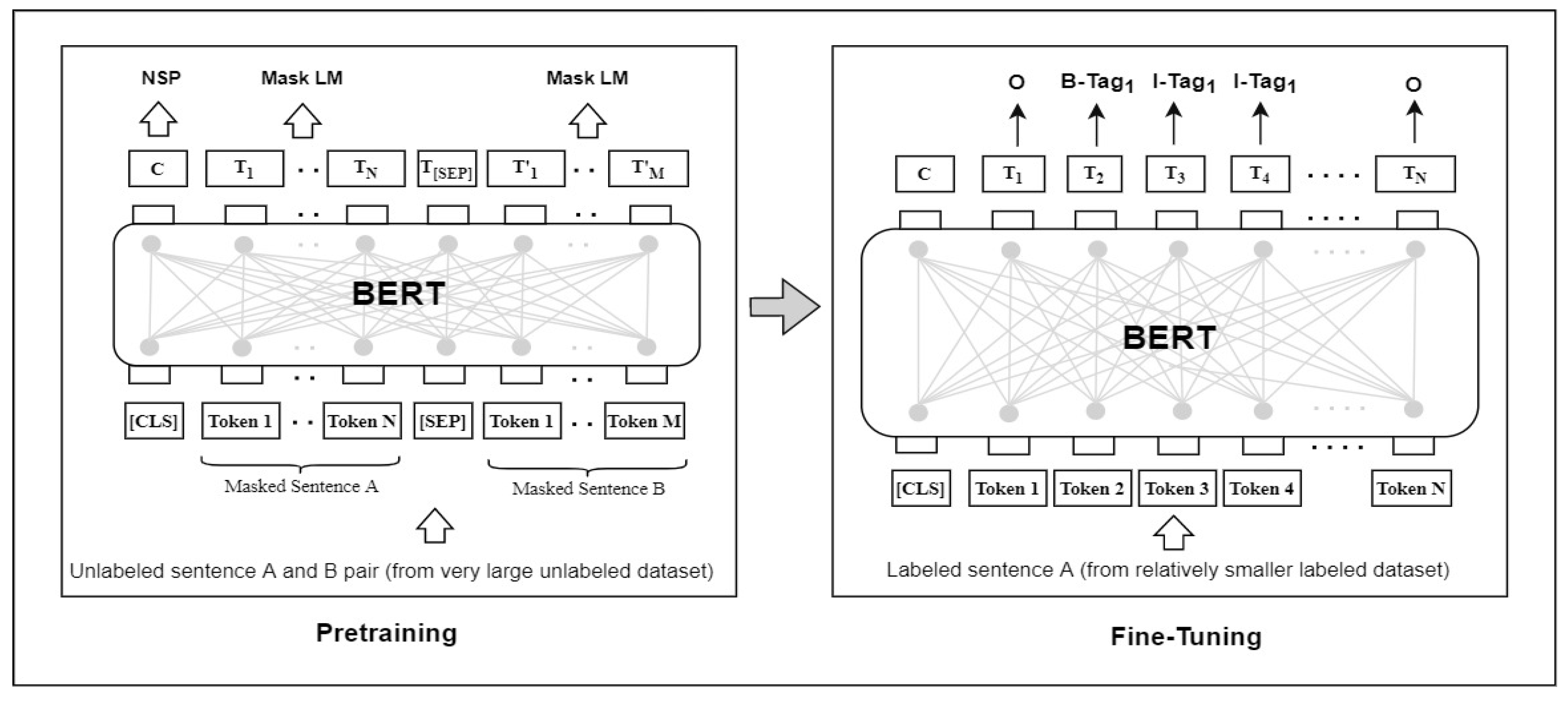

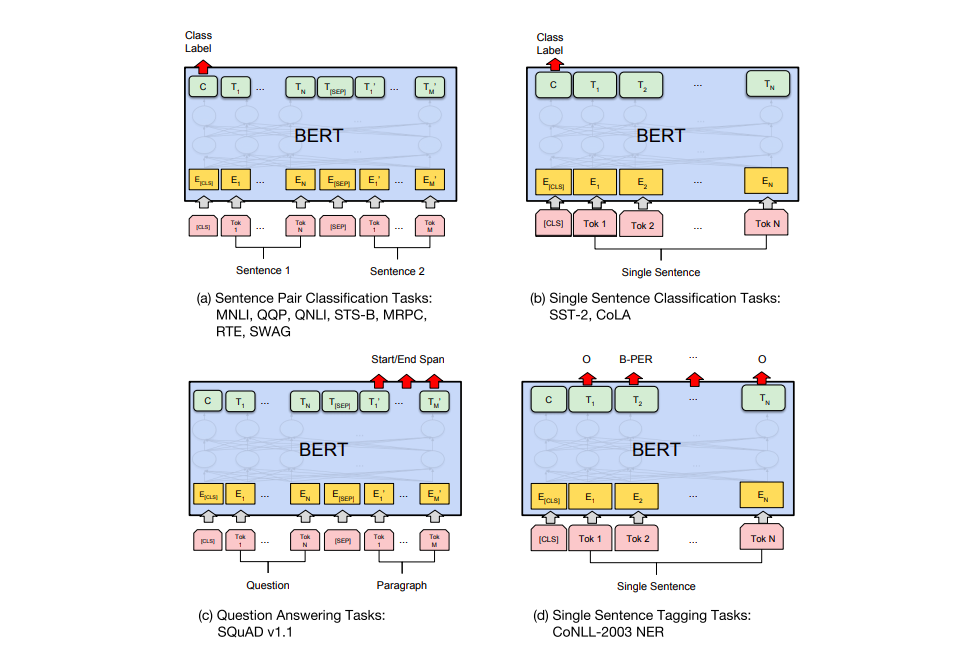

Paper summary — BERT: Bidirectional Transformers for Language Understanding | by Sanna Persson | Analytics Vidhya | Medium

![\[PDF\] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/df2b0e26d0599ce3e70df8a9da02e51594e0e992/3-Figure1-1.png)

[PDF] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | Semantic Scholar

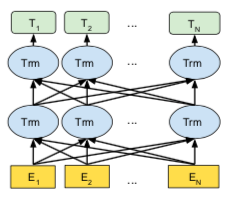

![\[PDF\] A Recurrent BERT-based Model for Question Generation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f31f5412abd70531755bc3f93d4b838a36901ba0/3-Figure1-1.png)